Triton Operator Documentation

Triton DataCenter (formerly “Triton Enterprise”, “SDC” or “SmartDataCenter”) is a complete cloud management solution for server and network virtualization, operations management, and customer self-service. It is the software that runs Triton Compute Service and can be used to power private and hybrid clouds on customer premises.

This page summarizes the core concepts, terminology, and architecture of Triton to help get Operators started with planning, deployment, and operation of the program. It contains almost no diagrams and is designed to be a quick read so you can move on to the detail of each topic rapidly. Links to the detailed sections are provided where appropriate.

If you are looking for End User documentation on Triton Compute Service, please see the section entitled Triton End User Documentation.

Architecture

A Triton DataCenter installation consists of two or more servers. One server acts as the management server (the "head node" or "HN") and the remainder are "compute nodes" (or "CNs"), which run the instances.

Triton is the cloud orchestration software that consists of the following components:

- An Operations Portal.

- A public API for provisioning and managing instances (virtual machines).

- A set of private APIs for use by Operators.

- Agent processes to manage communication between head node and compute nodes.

Triton also configures and allocates network resources to instances, giving them both internal (inter-instance) and external (internet) connectivity.

The underlying hypervisor, SmartOS, is a powerful and lightweight container-based virtualization layer that natively supports OS virtualization (containers) and hardware virtualization (HVM) for Linux, Windows, and FreeBSD.

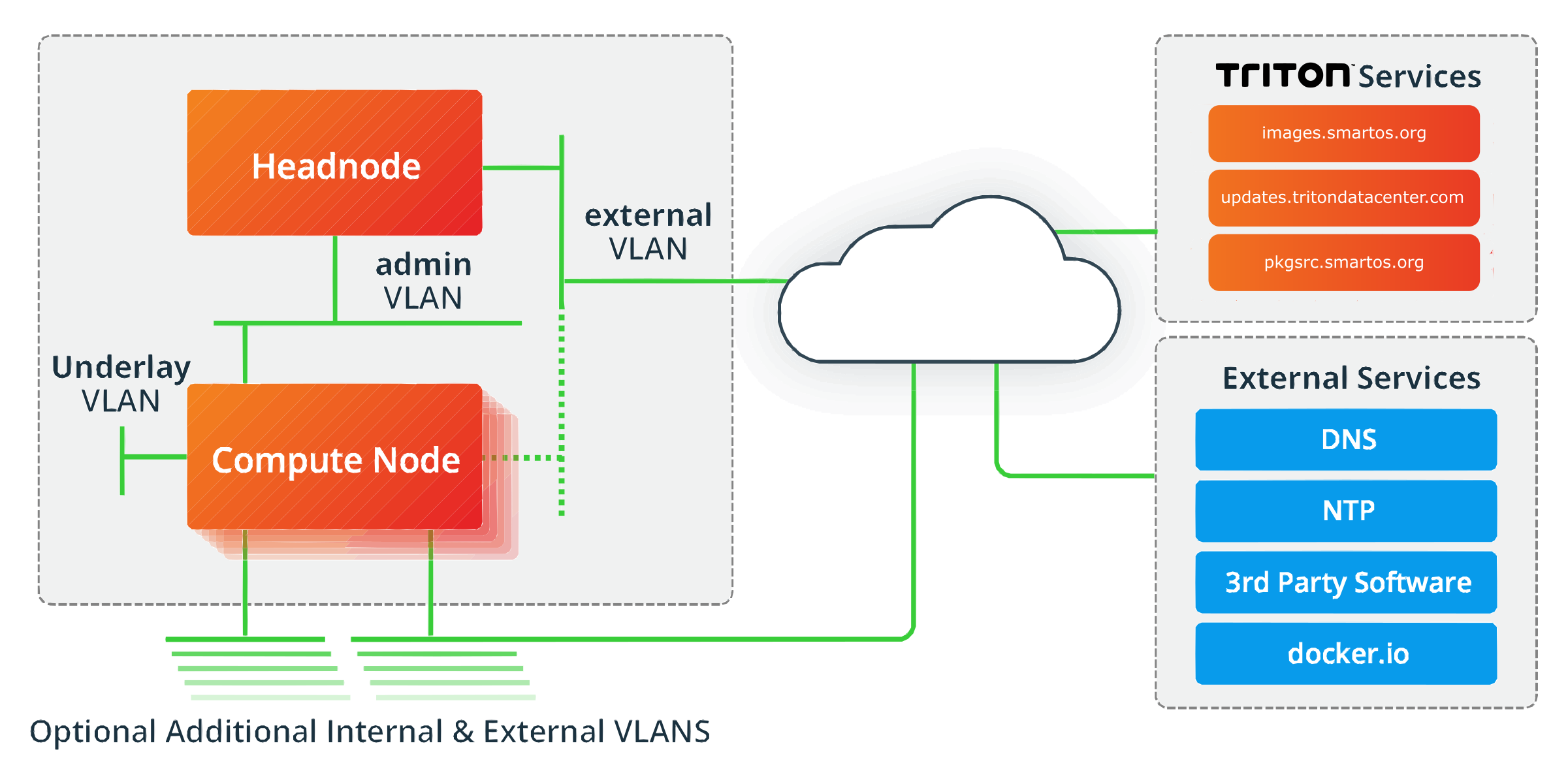

Triton: Operations / Physical View

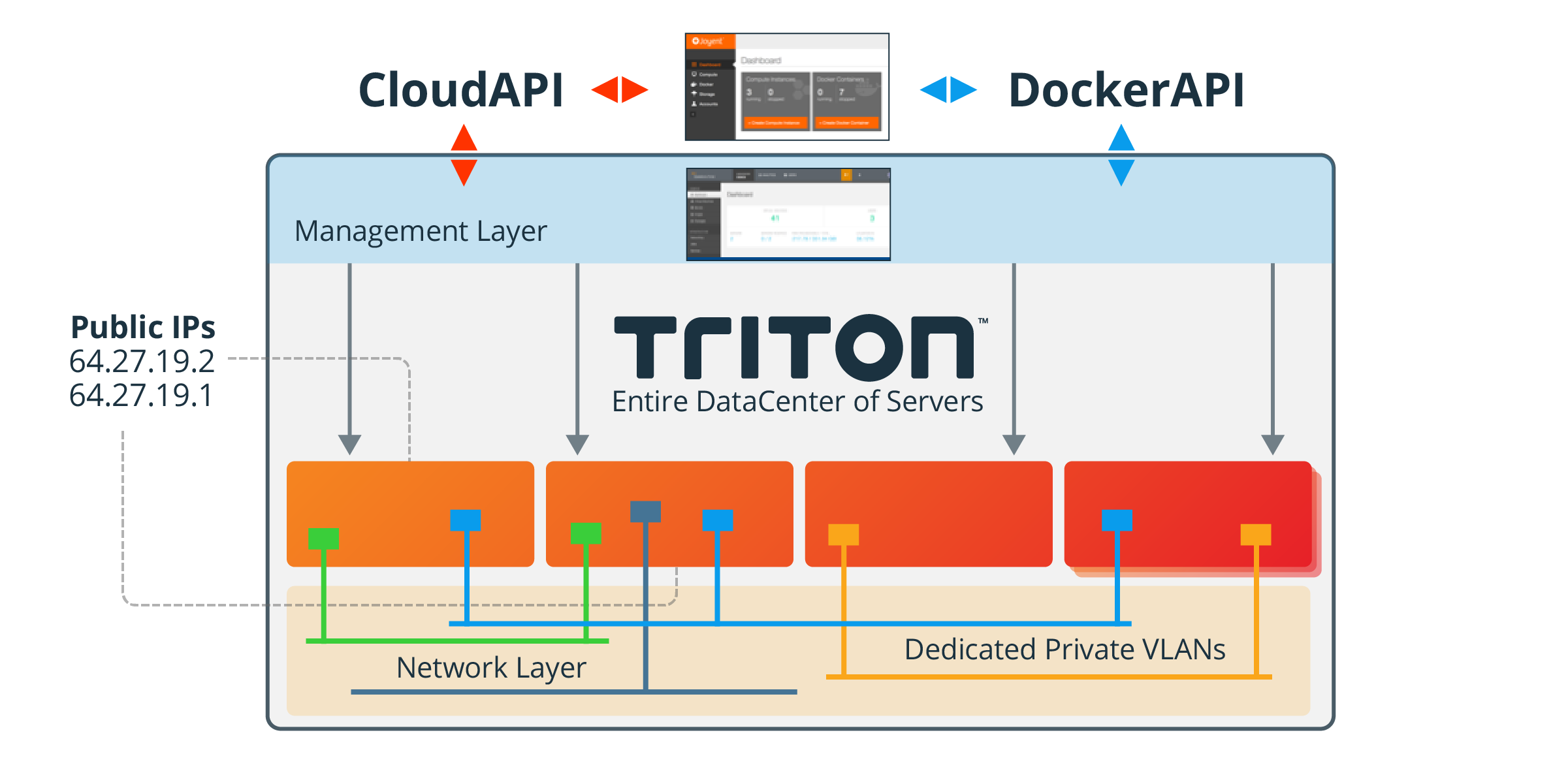

Triton: Logical View

Terminology

- Head node (HN)
  - Management server.
  - After initial installation, it runs the core services and APIs and is used to PXE boot the compute nodes.

- Compute nodes (CN)
  - Hosts for instances
  - Containers and hardware virtual machines
  - ZFS file system used for performance and reliability

- Images
  - Software and configuration to create an instance
  - Seed images provided by MNX for non-Docker containers
  - Docker images for use with Docker tooling, from Docker Hub or private registries
  - Operators and end users can create custom images

- Packages
  - Definition of resources for an instance (RAM, CPU, Disk, etc.)
  - Users select an image and package to create an instance

- Logical networks
  - Triton mapping of core networks
  - Each instance gets one Virtual NIC for each selected Network

- Underlay VLAN
  - A layer 2 network provisioned with jumbo frames enabled to support Triton virtual networking

- Services
  - Software deployment mechanism for Triton

- Workflows
  - Defined steps to perform tasks in Triton, such as provisioning
  - Runs a Job

Core services

Triton uses a service oriented architecture. Each core service is instantiated from an image and works with other services as necessary to manage the instance, user, and networking capabilities of Triton.

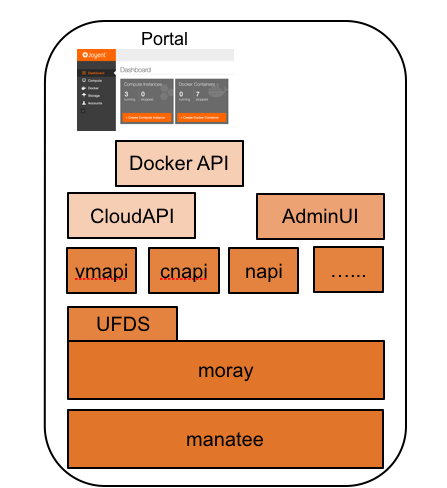

Triton: Core services logical view

The core services perform a variety of roles in support of instance management. They include a single data repository (Manatee), data access/management services, APIs, and communications services, as summarized below. Each core service runs in its own infrastructure container running SmartOS. All of them except the Manatee data store are stateless and can operate as multiple redundant instances. (See the resilience and continuity documentation for more details.) The core services themselves are managed through the Services API (SAPI) and can easily be upgraded by re-provisioning from updated images.

| Service | Description |
|---|---|
| adminui | The Operator's Management Interface / Operations Portal |
| amon | Alerting and monitoring service |
| amonredis | Redis data store for Amon |
| assets | Manages storage of images on the head and compute nodes |
| binder | Internal DNS service for Triton |
| cnapi | Compute node API |
| cns | Container Name Service, automatic and universal DNS for containers and VMs |
| cloudapi | The end user Public API for managing customer instances |
| dhcpd | Manages IP address allocation and creation of boot images for compute nodes |
| fwapi | Firewall API |
| imgapi | Image API |
| manatee | High availability Postgres based data store for Triton |
| moray | Key value data store based on Manatee. Most APIs store their data through Moray |
| napi | Network API |
| nat-User-UUID | Provides NAT access for containers on fabric networks; one per user |
| papi | Package API |
| portolan | Management of VXLAN networking |
| rabbitmq | Inter-server message management |
| redis | Data store used for caching data by NAPI, AdminUI and VMAPI (data here is not persistent) |
| sapi | Services API |
| sdc | Triton Tools |
| sdc-docker | Docker endpoint, provides access to Docker registries |
| ufds | Unified Foundational Directory Service, an LDAP implementation built on top of Moray |
| vmapi | Virtual Machine API (management of instances) |
| workflow | Workflow API |

Users

Triton provides Role-based management of infrastructure. The Account owner can create sub-users who are assigned to Roles that allow them to perform infrastructure administration tasks on behalf of the Account holder. Through Policies allocated to Roles, users can be enabled to perform tasks such as provisioning instances and managing firewall rules. This capability is described in detail in the section on user management.

Only very basic user information is stored in Triton; this includes name, login, email, phone number, and password.

Every Triton user must have an SSH key in their account. This key is used to authorize access to instances as described in the Instances section below. It is also used to authorize access to the publicly accessible CloudAPI.

APIs

Internal

The Internal APIs are accessible only from the Admin VLAN and are mainly used by the pre-defined workflows during the execution of jobs (provision, destroy, etc.). The Operations Portal provides an interface to the internal APIs and is the recommended method of managing Triton and obtaining information.

However, as Operators gain experience and system complexity grows, it is often expedient to use the APIs for reporting, searching and summarizing information. There is also some functionality of Triton that is not available in the Operations Portal and has to be executed through the appropriate API.

The APIs are RESTful and can be called using curl commands. Triton also comes with an easy-to-use Command Line Interface (CLI) for the APIs that simplifies their usage and syntax. Each API CLI is a command named sdc-xxxapi (e.g. sdc-vmapi, sdc-cnapi, etc.).
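As a rough illustration of using an internal API for reporting, the Python sketch below builds a request URL and summarizes instance records by state. The host name is hypothetical, and the `/vms` path and `state` filter are assumptions to check against the VMAPI reference; the sample records stand in for a decoded JSON response.

```python
from collections import Counter
from urllib.parse import urlencode, urlunsplit

# Hypothetical address of the VMAPI service on the admin network.
VMAPI_HOST = "vmapi.example.internal"


def vmapi_url(path, **filters):
    """Build a request URL, adding any filters as query parameters."""
    query = urlencode(sorted(filters.items()))
    return urlunsplit(("http", VMAPI_HOST, path, query, ""))


def count_by_state(vms):
    """Summarize a list of instance records (dicts) by their 'state' field."""
    return Counter(vm.get("state", "unknown") for vm in vms)


# Sample records standing in for a decoded JSON response (not real data).
sample = [
    {"uuid": "a", "state": "running"},
    {"uuid": "b", "state": "running"},
    {"uuid": "c", "state": "stopped"},
]

print(vmapi_url("/vms", state="running"))
print(dict(count_by_state(sample)))
```

The same summary could be produced by piping the output of the matching CLI command into a JSON tool; the point is only that the responses are plain JSON and easy to aggregate.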

Public API

The end user public API is called the CloudAPI. It is an internet-accessible RESTful API authenticated using end user credentials (SSH keys). It allows end users to create and manage their own instances using an industry-standard interface. MNX also provides a Command Line Interface (CLI) for the CloudAPI, written in Node.js, which can be installed on any client environment. As with the internal APIs, the CLI simplifies the usage and syntax of the API.

Information on installing the CloudAPI CLI and a detailed description of the API are available at apidocs.tritondatacenter.com/cloudapi
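To make the idea of SSH-key authentication concrete, here is a minimal Python sketch that assembles a signature-style `Authorization` header. The header layout, the key identifier format, and the choice to sign the `Date` value are all assumptions to verify against the CloudAPI reference. The `sign` argument is a hypothetical caller-supplied function, and `fake_sign` is a placeholder rather than real cryptography.

```python
import base64
from email.utils import formatdate


def signature_header(login, fingerprint, date, sign):
    """Return (Date, Authorization) header values for a signed request.

    `sign` takes bytes and returns raw signature bytes produced with the
    private half of an SSH key registered on the account.
    """
    key_id = f"/{login}/keys/{fingerprint}"  # assumed key identifier layout
    signature = base64.b64encode(sign(date.encode("ascii"))).decode("ascii")
    auth = f'Signature keyId="{key_id}",algorithm="rsa-sha256" {signature}'
    return date, auth


def fake_sign(data):
    # Placeholder only: a real client signs `data` with the private key.
    return b"not-a-real-signature"


date = formatdate(usegmt=True)  # current time as an HTTP-style date string
print(signature_header("demo", "aa:bb:cc", date, fake_sign)[1])
```

In practice the CloudAPI CLI handles this step, so a sketch like this is mainly useful for understanding what the CLI does on the user's behalf.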

Instances (containers and virtual machines)

Triton supports three types of instances:

- Infrastructure containers running container-optimized Linux or SmartOS. These containers offer all the services of a full Unix host. They are OS-virtualized and share a common kernel with the host server, which gives them bare metal performance and a low memory and disk footprint.

- Docker containers running Linux or SmartOS images.

- Hardware virtual machines (HVM) running separate guest operating systems, including Linux, Windows, and FreeBSD, in KVM or bhyve. These instances do not enjoy the bare-metal performance that container instances do.

Infrastructure containers function as completely isolated virtual servers within the single operating system instance of the host. The technology consolidates multiple sets of application services onto one system and places each into isolated instances. This approach delivers maximum utilization and reduced cost, and provides a wall of separation similar to the protections of separate instances on a single machine.

The container hypervisor enjoys complete visibility into containers, both Docker and infrastructure. This visibility allows the container hypervisor to provide containers with as-needed access to a large pool of available resources, while still providing each instance with minimum guaranteed access to resources based on a pre-established fair share scheduling algorithm. In normal operating conditions, all RAM and CPU resources are fully utilized either directly by applications or for data caching. Triton DataCenter ZFS provides a write-back cache that increases I/O throughput significantly.
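The combination of a guaranteed minimum and as-needed access to spare capacity can be illustrated with a small proportional-share calculation. This is a sketch of the general idea only, not the scheduler's actual algorithm; the share values and demands are made up, and shares are assumed to be positive.

```python
def cpu_allocation(shares, demand, capacity):
    """Divide `capacity` among instances in proportion to their shares.

    No instance receives more than it demands; capacity that one instance
    leaves unused is redistributed among those that still want more.
    """
    alloc = {name: 0.0 for name in shares}
    active = {name for name in shares if demand[name] > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total = sum(shares[n] for n in active)
        satisfied = set()
        handed_out = 0.0
        for n in active:
            offer = remaining * shares[n] / total  # proportional slice
            take = min(offer, demand[n] - alloc[n])
            alloc[n] += take
            handed_out += take
            if demand[n] - alloc[n] <= 1e-9:
                satisfied.add(n)
        remaining -= handed_out
        if not satisfied:
            break  # everyone took a full slice, nothing left to redistribute
        active -= satisfied
    return alloc


shares = {"a": 100, "b": 100, "c": 200}
demand = {"a": 10, "b": 100, "c": 100}
print(cpu_allocation(shares, demand, capacity=100))
```

Here instance `a` needs less than its proportional slice, so the capacity it leaves unused is split between `b` and `c` according to their shares.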

Instances of all types have one or more fixed IP addresses that are allocated when the instance is provisioned or when additional network interface cards (NICs) are added.

Access to (non-Windows) instances is initially by SSH only (for Windows, use RDP). As described above, each user of Triton must have at least one SSH key added to their account before provisioning any instances. The keys on the account are used to permit access to instances in the following ways:

- For infrastructure containers running SmartOS, a Triton technology called SmartLogin validates SSH key-based access attempts directly against the keys held in Triton. Keys are not stored in the instance itself, although users have the option to do this if they so choose. Thus, any key stored on the Triton user account can be used to access an infrastructure container running SmartOS, even if the key is added after the instance is provisioned.

- For HVM based Linux and FreeBSD instances, the SSH keys on the account are copied to the instance at the time it is provisioned. Keys added after the instance is provisioned will not be copied down (although they can be manually added to the instance's `/root/.ssh/authorized_keys` file).

- For HVM based Windows instances, a password will be generated at instance creation time that can then be used to access the instance. This password can be viewed in AdminUI or via a call to `sdc-vmadm` or `sdc-vmapi`.

See Managing instance access for more information.
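The access rules above can be summarized as a small decision function. This is an illustrative Python model only; the `kind` labels and the integer timestamps are hypothetical and do not correspond to Triton field names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    kind: str            # hypothetical labels, see key_grants_ssh below
    provisioned_at: int  # any increasing timestamp, e.g. epoch seconds


def key_grants_ssh(instance, key_added_at):
    """Return True if an account key added at `key_added_at` gives SSH access
    to `instance` without any manual step inside the instance."""
    if instance.kind == "smartos-container":
        # SmartLogin checks the account's keys at login time.
        return True
    if instance.kind == "hvm-unix":
        # Keys are copied into the instance once, at provisioning.
        return key_added_at <= instance.provisioned_at
    if instance.kind == "hvm-windows":
        # Access uses a generated password instead of SSH keys.
        return False
    raise ValueError(f"unknown instance kind: {instance.kind}")


container = Instance("smartos-container", provisioned_at=100)
linux_vm = Instance("hvm-unix", provisioned_at=100)
print(key_grants_ssh(container, key_added_at=200))
print(key_grants_ssh(linux_vm, key_added_at=200))
```

A key added after provisioning works for the SmartOS container but not for the HVM guest, which matches the behavior described in the list.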

Networking

Triton provides networking capability for instances, covering both internal (inter-instance) communication and external internet access. Networks are configured in Triton as Logical Networks, which map to the configuration of networks in the core networking switches and routers of your organization. Networks used by Triton must be dedicated to Triton and should not have external devices or servers attached to them other than network switches and routers.

Logical Network definitions in Triton must match the core network configuration in every respect:

- Definition of the Subnet in CIDR format.

- VLAN ID (for tagged VLANs).

- Range of provisionable IP addresses.

- Gateway IP address (if applicable).

- DNS Resolver IP addresses (if applicable).

- Routing information for connectivity to other Logical Networks.
  - Often required if multiple internal VLANs are configured.

When an instance is provisioned, the end user selects one or more logical networks to use with the instance (up to a maximum of 32). The instance will be created with a Virtual Network Interface (VNIC) for each selected network. Each VNIC will have a static IP address. VNICs can be added to and removed from the instance at any time after provisioning.
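Because a Logical Network definition has to agree with the physical configuration, it can help to sanity-check a definition before entering it. The Python sketch below models the attributes listed above and checks them for internal consistency. The class and field names are hypothetical, not NAPI's schema, and treating VLAN ID 0 as "untagged" is an assumption.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Optional

MAX_NETWORKS = 32  # per-instance limit on selected networks stated in the text


@dataclass
class LogicalNetwork:
    name: str
    subnet: str               # CIDR notation, e.g. "10.0.0.0/24"
    provision_start: str
    provision_end: str
    vlan_id: int = 0          # assumption: 0 stands for an untagged network
    gateway: Optional[str] = None
    resolvers: List[str] = field(default_factory=list)

    def problems(self):
        """Return a list of human-readable consistency problems."""
        issues = []
        net = ipaddress.ip_network(self.subnet)
        start = ipaddress.ip_address(self.provision_start)
        end = ipaddress.ip_address(self.provision_end)
        if start not in net or end not in net:
            issues.append("provision range lies outside the subnet")
        if start > end:
            issues.append("provision range start is after its end")
        if not 0 <= self.vlan_id <= 4094:
            issues.append("VLAN ID must be between 0 and 4094")
        if self.gateway and ipaddress.ip_address(self.gateway) not in net:
            issues.append("gateway is not inside the subnet")
        return issues


def selection_ok(networks):
    """An instance needs at least one network and at most MAX_NETWORKS."""
    return 1 <= len(networks) <= MAX_NETWORKS


external = LogicalNetwork("external", "10.0.0.0/24", "10.0.0.10",
                          "10.0.0.200", vlan_id=100, gateway="10.0.0.1")
print(external.problems())
print(selection_ok([external]))
```

A check like this only catches internal inconsistencies. Whether the definition matches the switches and routers still has to be confirmed against the network configuration itself.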

See Configuring networks for more information.

Firewall

Triton provides a built-in firewall capability known as Cloud Firewall, which can be managed via both the internal APIs and CloudAPI. End users can define a single set of firewall rules to be applied to any or all of their instances. Rules can be enabled, disabled, and reconfigured dynamically without rebooting an instance. Rule syntax closely follows the syntax used for iptables.
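As a rough idea of what a rule looks like, the Python sketch below composes a rule string from its parts. The `FROM ... TO ... ALLOW|BLOCK ...` wording is an assumption about the rule language and should be checked against the Firewall documentation; `fw_rule` is a hypothetical helper, not part of any Triton tool.

```python
def fw_rule(action, protocol, port, source="any", target="all vms"):
    """Compose a firewall rule string from its parts (assumed rule format)."""
    action = action.upper()
    if action not in ("ALLOW", "BLOCK"):
        raise ValueError("action must be ALLOW or BLOCK")
    protocol = protocol.lower()
    if protocol not in ("tcp", "udp"):
        raise ValueError("this sketch only handles tcp and udp")
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    return f"FROM {source} TO {target} {action} {protocol} PORT {port}"


# Permit inbound SSH to every instance owned by the account.
print(fw_rule("allow", "tcp", 22))
```

Generating rules from a helper like this makes it easier to keep one rule set consistent across many instances, which is how the text describes rules being applied.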

See Firewall for more information on the MNX Cloud Firewall.