Compute node, image, and package traits

This page describes how to create, manage, configure, and delete compute node, image, and package traits in Triton, and how they affect provisioning.

What is a trait?

Traits provide information that Triton uses to determine which compute node an instance should be provisioned on. At provision time, Triton reads the traits assigned to the package and image being provisioned and then searches for compute nodes that have the same traits.

For a provision to succeed, at least one compute node must have ALL of the traits present in the create instance request. In other words, if the package has a trait and the image has a trait, there must be a compute node that has both of those traits.

Traits can have single values (strings or booleans) or arrays of values. An array trait matches on any value in the array; it is not necessary to match all values in an array. Thus a single-value trait on a package can find a match in an array trait on a compute node, and vice versa. There are a number of examples of this below.
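The single-value and array matching rule described above can be read as a set intersection: a single value behaves like a one-element array, and two values match if they share at least one element. The sketch below illustrates that rule only; it is not Triton's designation code, and `as_values` and `values_match` are hypothetical names.

```python
from typing import List, Set, Union

# A trait value is a string, a boolean, or an array of those (per this page).
TraitValue = Union[str, bool, List[Union[str, bool]]]


def as_values(value: TraitValue) -> Set[Union[str, bool]]:
    """Treat a single value as a one-element array so both forms compare alike."""
    if isinstance(value, list):
        return set(value)
    return {value}


def values_match(a: TraitValue, b: TraitValue) -> bool:
    """Hypothetical helper: two trait values match if they share any value."""
    return bool(as_values(a) & as_values(b))


print(values_match(True, True))                   # True
print(values_match("A", ["A", "B"]))              # True
print(values_match(["A", "C"], ["C", "B"]))       # True
print(values_match("prod", ["dev", "staging"]))   # False
```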

The traits mechanism

Traits can be associated with compute nodes, images, and packages.

Why traits?

Traits let you exercise control over your environment by creating rules for certain packages. Some example uses of traits:

- Define a special set of High I/O packages that can only be provisioned on nodes marked as having SSD drives. By using traits, you can ensure that only the High I/O packages are provisioned on your expensive SSD hardware.

- Restrict a compute node to one application or to a group of applications.

- Mark a compute node as "out of bounds" for provisioning; for example, to be used for internal development or operational use.

- Mark an image as requiring a certain type of hardware. For example, you can use a trait to limit instances provisioned with a certain image to only run on a certain class of machine.

- Mark an image as provisionable only with certain packages. For example, restrict a load balancer image to run only on packages with a "highcpu" trait set.

Trait forms and formatting

Traits are defined in JSON objects and come in two formats, a single value or an array of values, as shown below. Any object that accepts traits can have multiple traits in any combination of single values and arrays. Values can be either strings or booleans.

Single Value

A simple key value pair.

Examples:

{
  "ssd": true
}

{
  "env": "prod"
}

Array

A key with an array of values.

{
  "hw": ["richmond-a", "richmond-b", "mantis-shrimp"]
}

Multiple Traits

{
  "ssd": true,
  "hw": ["richmond-a", "richmond-b", "mantis-shrimp"]
}

Additional notes on traits:

- Compute nodes, packages and images can contain zero, one, or many traits.

- These trait formats can be combined to provide fine-grained control over requirements.

- Array traits match on any one value in the array. Thus

{ "key": "A" }

will match

{ "key": ["A", "B"] }

Likewise, when matching two array traits, only one value needs to appear in both arrays:

{ "key": ["A", "C"] }

will match

{ "key": ["C", "B"] }

Further Examples:

- Looking for a CN traited as having an SSD cache and reserved for the application "hello world".

This CN will not match. The CN satisfies the ssd trait but does not satisfy the application trait.

// Package: { "ssd": true, "app": "hello world" }
// Compute Node: { "ssd": true, "app": "email" }

This CN will match:

// Package: { "ssd": true, "hw": ["richmond-a", "richmond-b", "mantis-shrimp"], "app": "hello world" }
// Compute Node: { "ssd": true, "hw": "richmond-a", "app": "hello world" }

- Any failure to match traits disqualifies a CN for provisioning purposes. This includes the case where there are no traits on one side and traits on the other, e.g.:

// Package: {}
// Compute Node: { "ssd": true }
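Putting these rules together, a compute node qualifies only if every package and image trait is present on it with at least one shared value, and every trait on the CN is also requested (the "no traits on one side" case). The sketch below is a minimal illustration of the rules as stated on this page, not Triton's actual allocator; `cn_qualifies` is a hypothetical name, and it does not attempt to define how conflicting package and image values for the same key are combined.

```python
from typing import Dict, List, Union

TraitValue = Union[str, bool, List[Union[str, bool]]]
Traits = Dict[str, TraitValue]


def _values(value: TraitValue) -> set:
    # A single value behaves like a one-element array.
    return set(value) if isinstance(value, list) else {value}


def cn_qualifies(cn: Traits, *requested: Traits) -> bool:
    """Hypothetical check of a CN against package and image traits."""
    # Every trait on the package and the image must exist on the CN
    # and share at least one value with it.
    for traits in requested:
        for key, value in traits.items():
            if key not in cn or not (_values(cn[key]) & _values(value)):
                return False
    # Per this page, a CN trait that no request trait mentions also
    # disqualifies the CN.
    requested_keys = {key for traits in requested for key in traits}
    return set(cn) <= requested_keys


# The three examples above (the image is assumed to have no traits).
print(cn_qualifies({"ssd": True, "app": "email"},
                   {"ssd": True, "app": "hello world"}))            # False
print(cn_qualifies({"ssd": True, "hw": "richmond-a", "app": "hello world"},
                   {"ssd": True,
                    "hw": ["richmond-a", "richmond-b", "mantis-shrimp"],
                    "app": "hello world"}))                          # True
print(cn_qualifies({"ssd": True}, {}))                               # False
```

Package and image traits are passed as separate arguments, for example `cn_qualifies(cn_traits, package_traits, image_traits)`.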

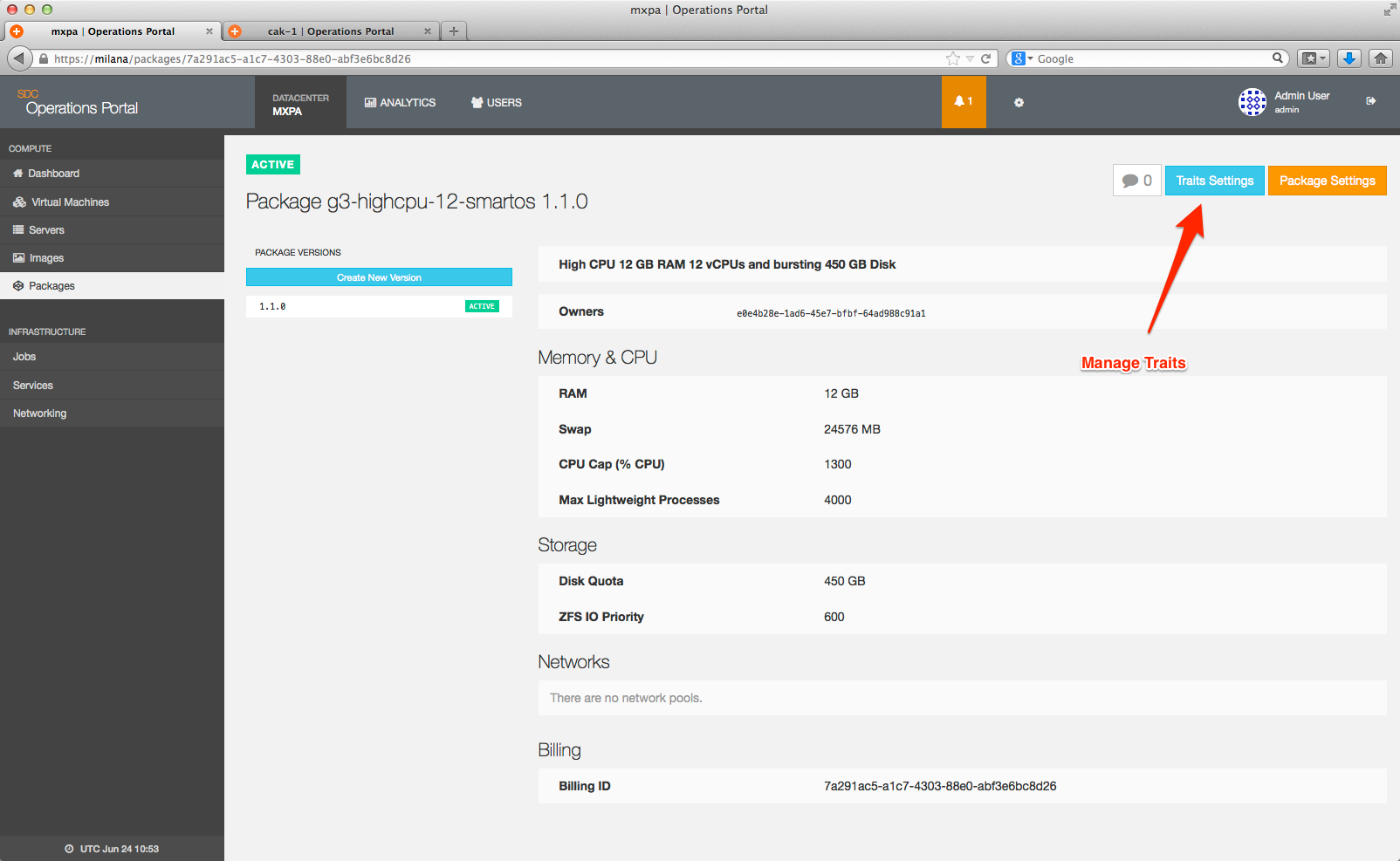

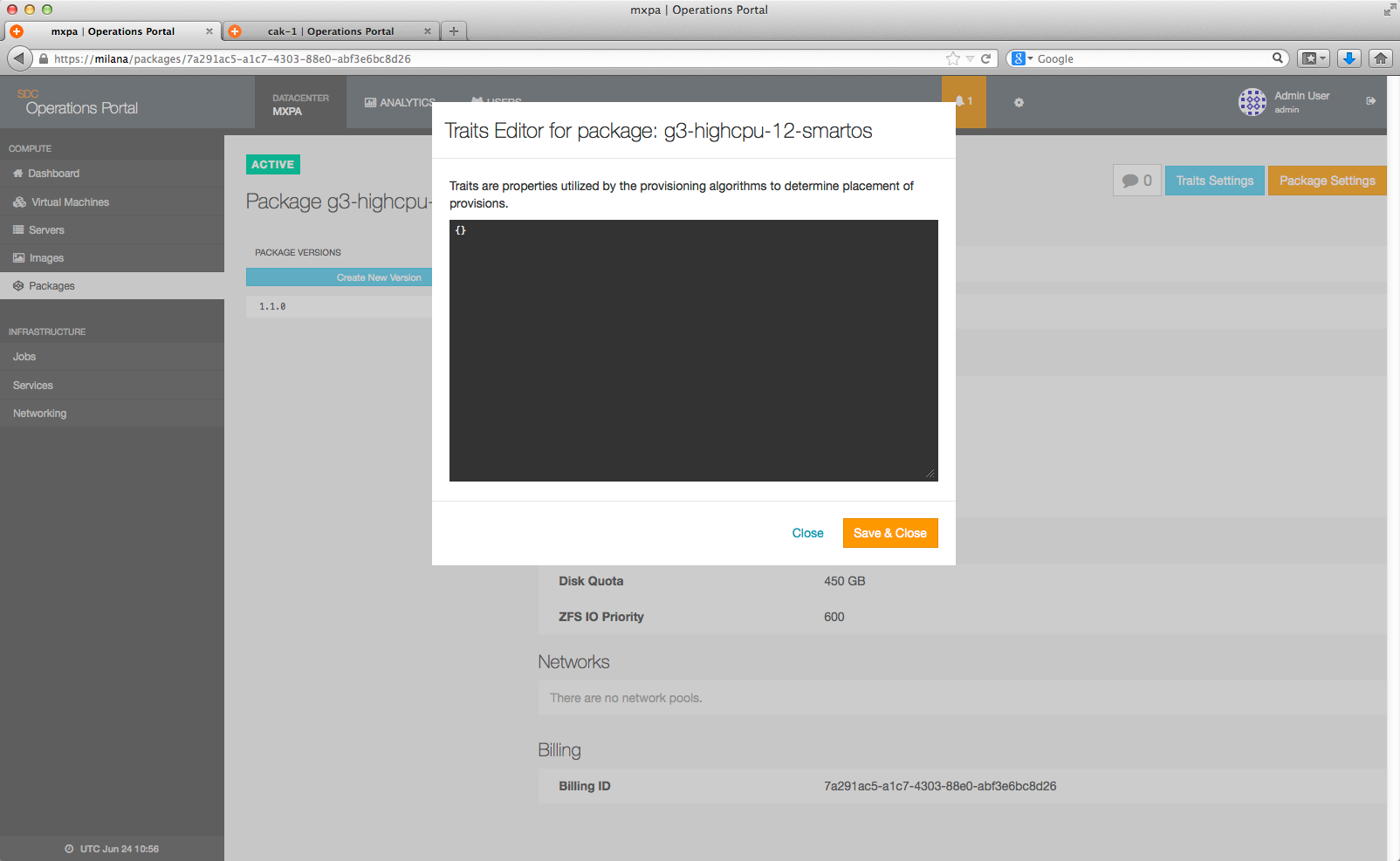

Package traits

Package traits are managed by going to the Package Detail page using the Operations Portal web interface.

Package traits are assigned in the following format:

{
  "ssd": true
}

For example:

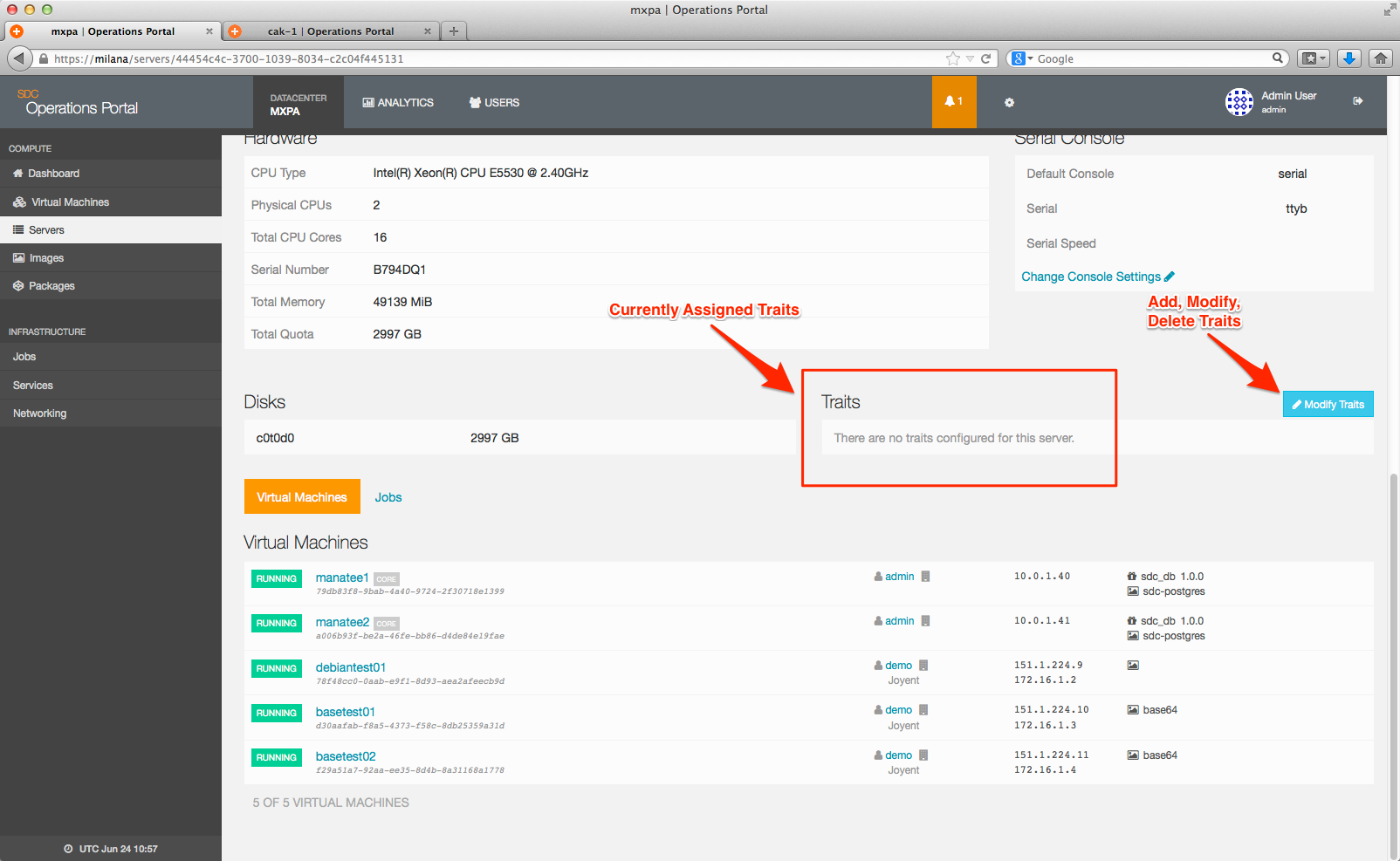

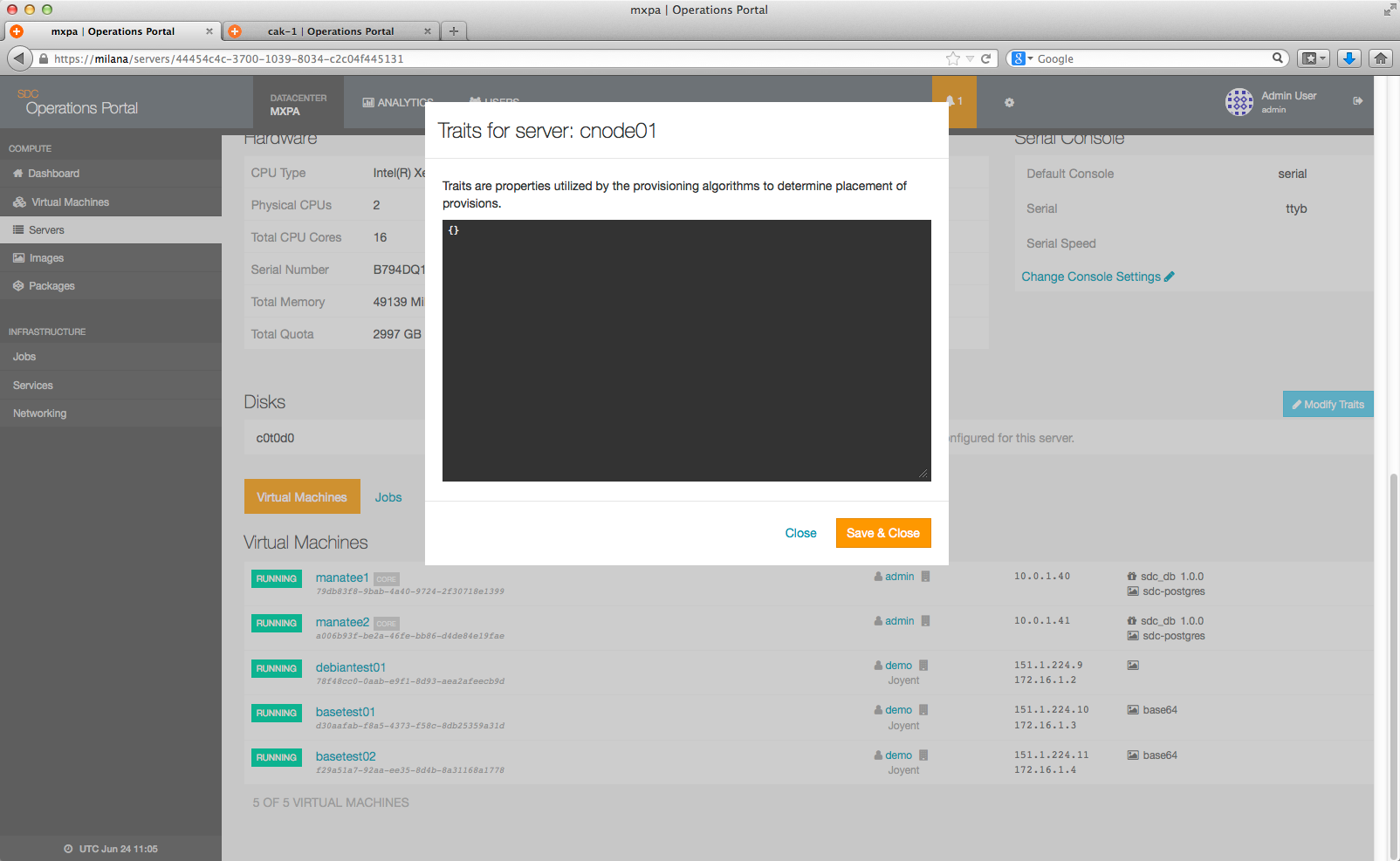

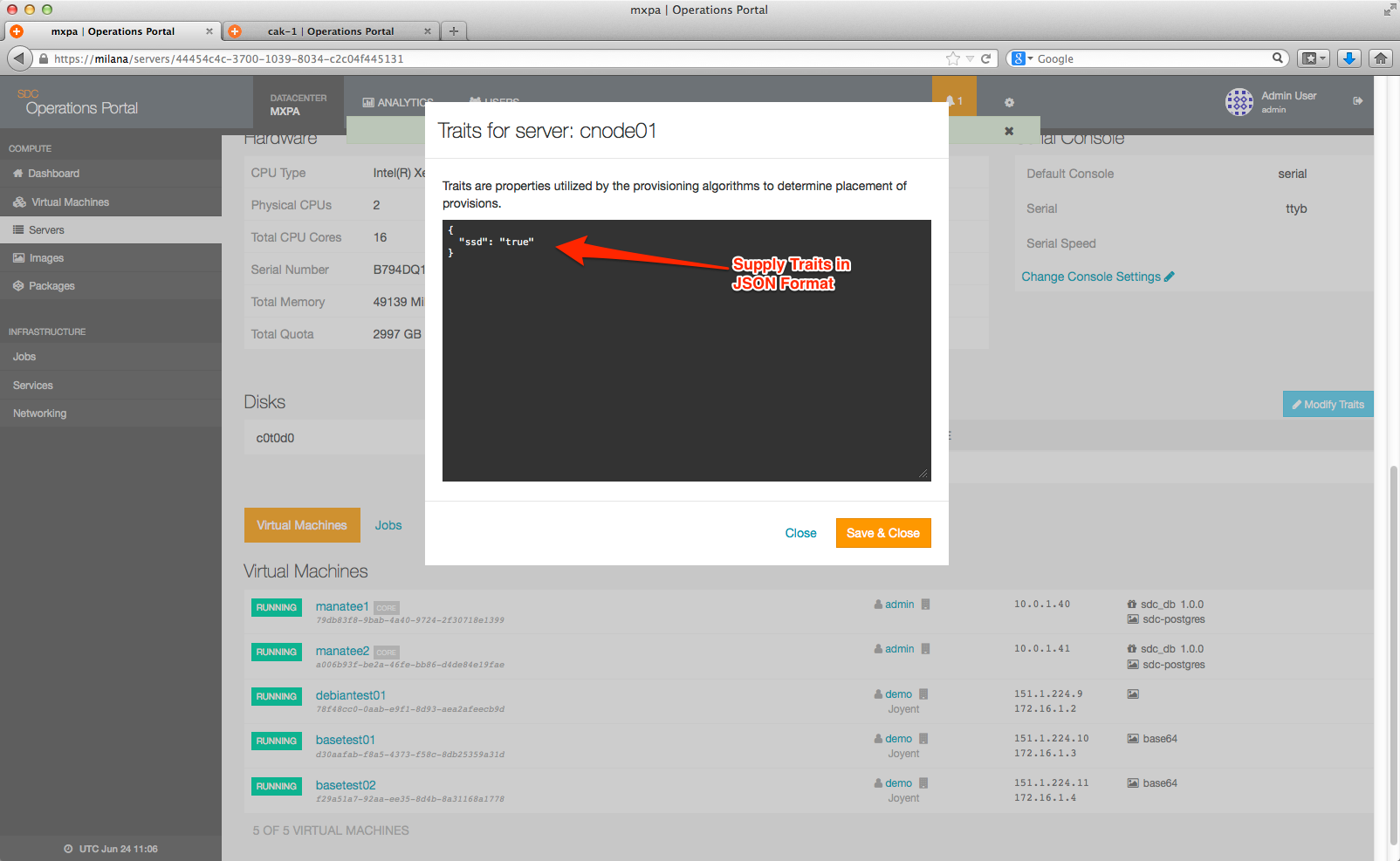

Compute node traits

Compute node traits are managed by going to the Server Detail page using the Operations Portal web interface.

To add, modify, or delete compute node traits, click the Modify Traits link.

Compute node traits are assigned in the following format:

{
  "ssd": true
}

For example:

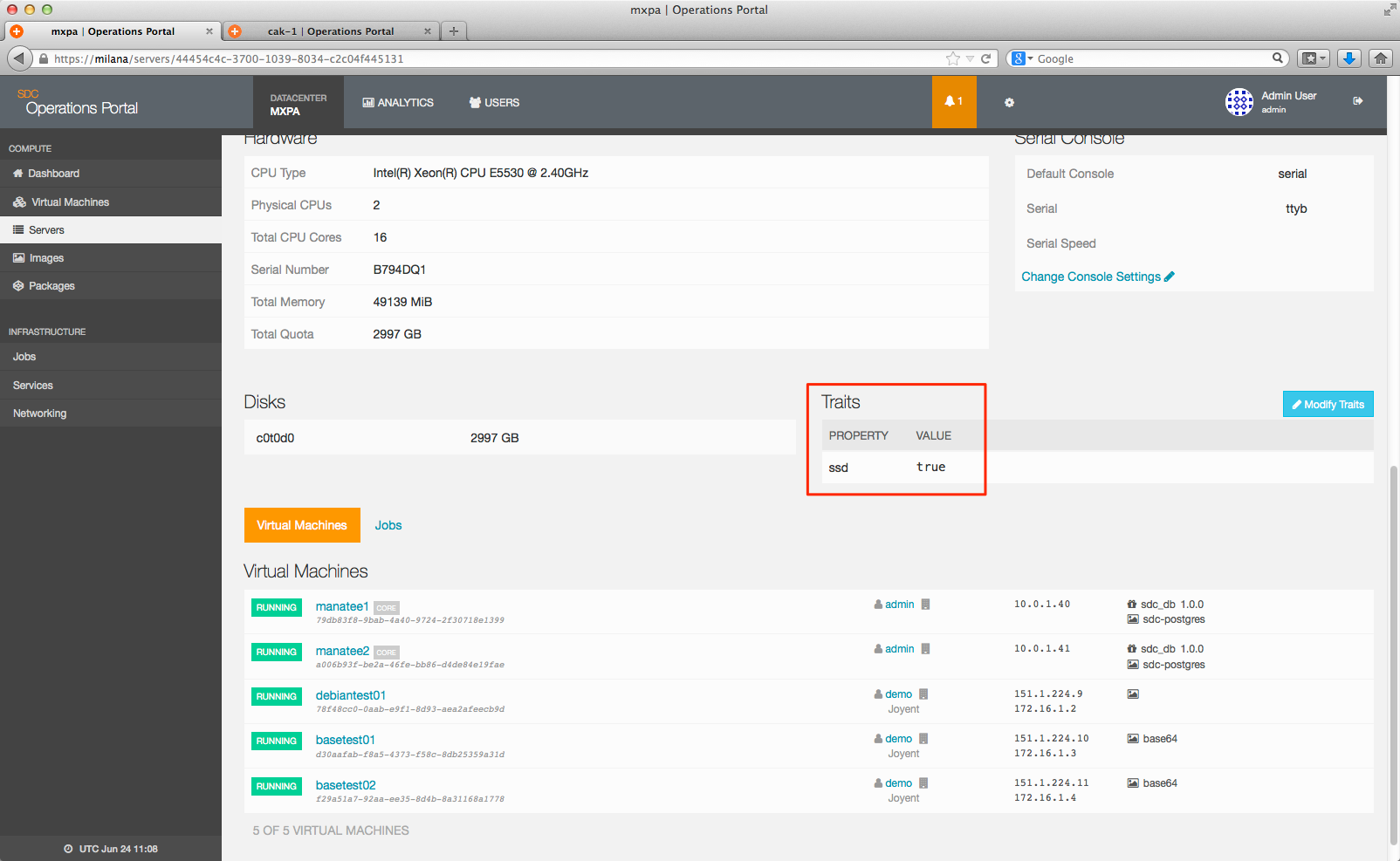

When you leave the editor, the trait is shown on the Server Detail page:

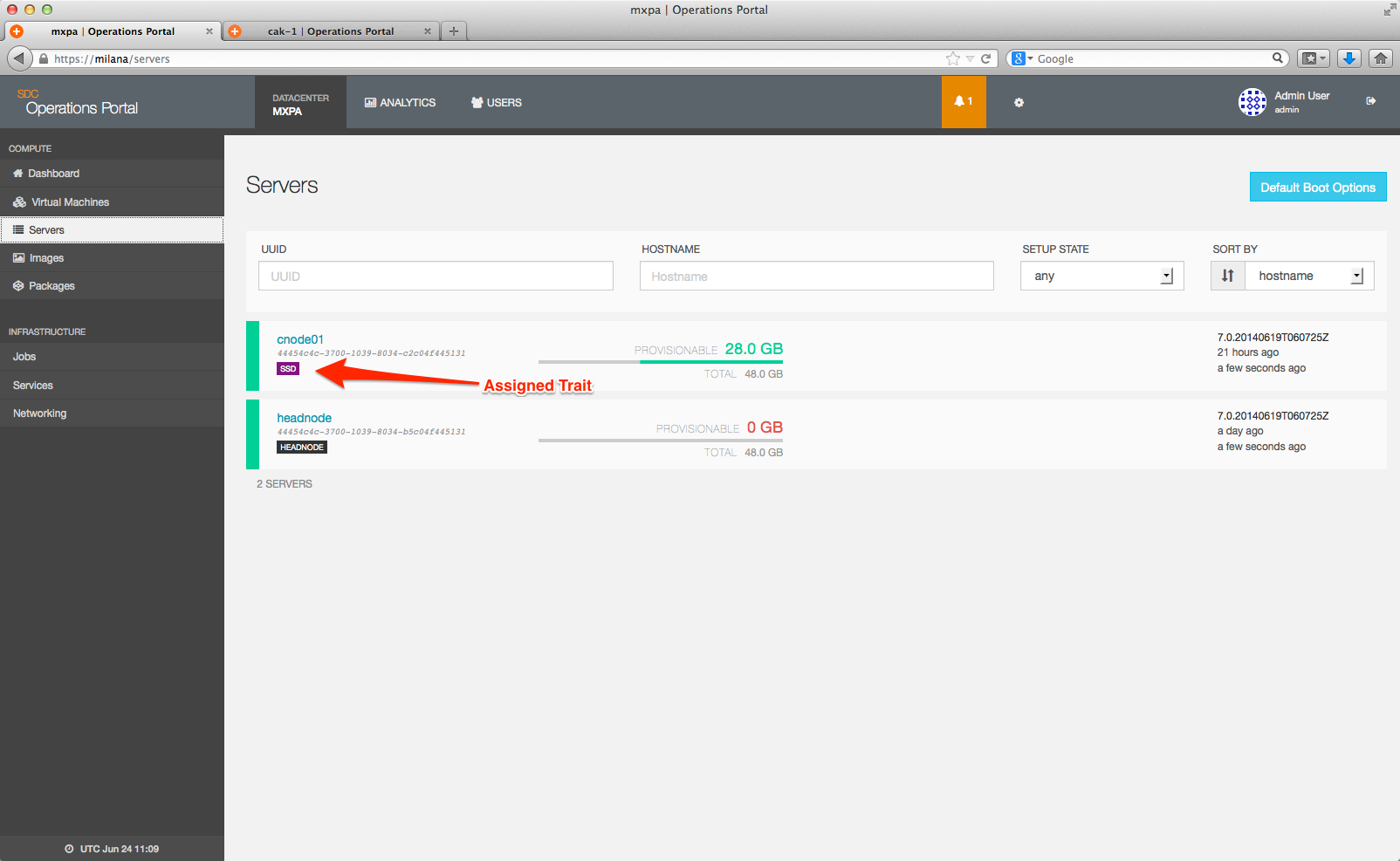

Once a compute node trait is assigned, it also appears as an additional data field on the main server page:

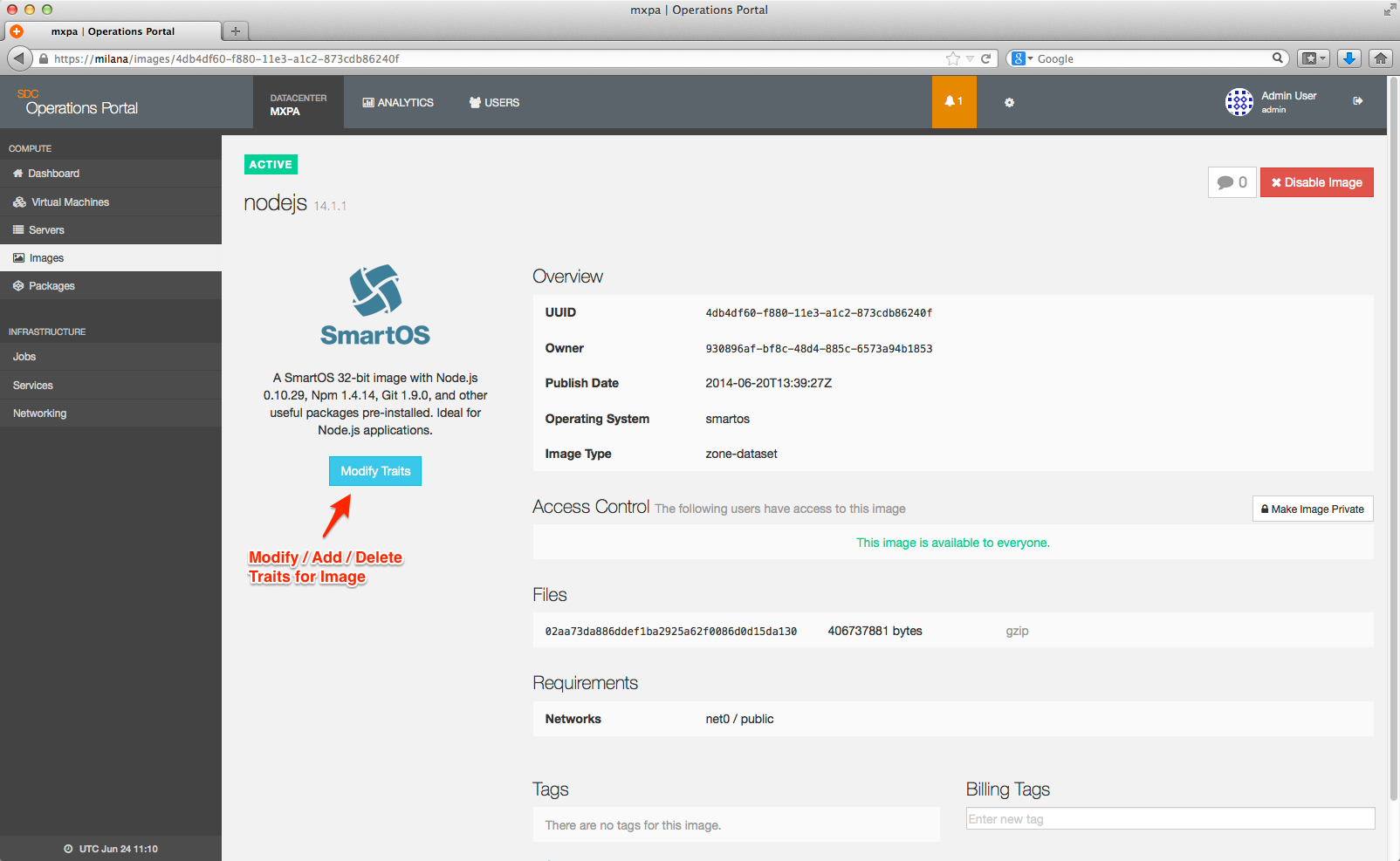

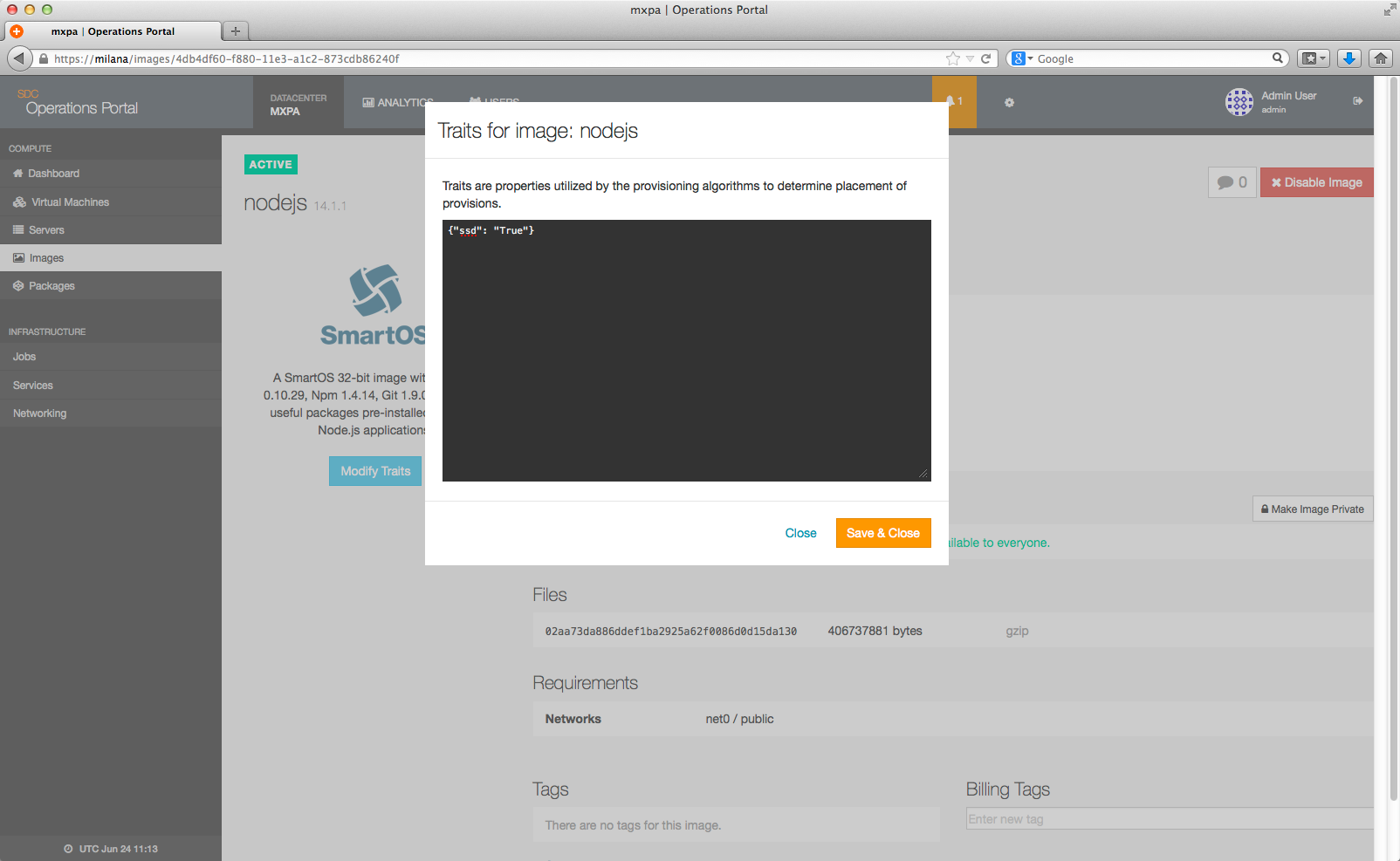

Image traits

Image traits are managed by going to the Image Detail page using the Operations Portal web interface.

Image traits are assigned in the following format:

{
  "ssd": true
}

For example, to permit the Node.js image to be provisioned only using hardware (servers) and packages that also have the ssd trait set, assign the trait above to that image on its Image Detail page.

Locality hints

Under many circumstances it is desirable to have a new instance placed near or far from existing instances. For example, instances containing two replicating databases should probably be kept far from each other, in case a server or rack loses power. Or consider a webhead and a cache: keeping a webapp in the same rack as memcache provides better performance.

Specifying locality hints is optional. By default Triton tries to keep a customer's instances on separate racks or servers for high-availability reasons. However, a provisioning request can specify one or more instances for Triton to try to allocate near or far from.

This hint asks Triton to allocate a server near one instance and far from the other three. Note that allocating a new instance far from other instances takes precedence over placing it near instances, since availability is usually more important than performance:

"locality": {
  "near": "803e6c05-7c89-464f-b8f7-37aa99ee3ead",
  "far": ["53e93593-6c4b-4268-963f-32633f526548",
          "a48514a8-95ec-4c2e-a874-63c332a7c8bc",
          "1988fa44-b849-449f-abf0-ab0f198d862e"]
}

The UUIDs provided should be the IDs of instances that belong to you.

Both near and far are also optional; you can provide just one if desired. Lastly, if there's only a single UUID entry in an array, you can omit the array and provide the UUID string directly as the value to a near/far key.
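Because near and far each accept either a single UUID string or an array, a client building a provisioning request may want to normalize and sanity-check the hint first. The sketch below is a hypothetical client-side helper (`build_locality` is not part of any Triton SDK); it only checks that each entry parses as a UUID and does not verify that the instances belong to you.

```python
import uuid
from typing import Dict, List, Optional, Union

UuidOrList = Union[str, List[str]]


def _as_list(value: Optional[UuidOrList]) -> List[str]:
    # Accept None, a single UUID string, or a list of UUID strings.
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def build_locality(near: Optional[UuidOrList] = None,
                   far: Optional[UuidOrList] = None) -> Dict[str, UuidOrList]:
    """Hypothetical helper that builds a "locality" object for a request."""
    locality: Dict[str, UuidOrList] = {}
    for key, value in (("near", near), ("far", far)):
        ids = _as_list(value)
        for entry in ids:
            uuid.UUID(entry)  # raises ValueError if the entry is not a UUID
        if len(ids) == 1:
            locality[key] = ids[0]  # a single UUID may be given without an array
        elif ids:
            locality[key] = ids
    return locality


print(build_locality(
    near="803e6c05-7c89-464f-b8f7-37aa99ee3ead",
    far=["53e93593-6c4b-4268-963f-32633f526548",
         "a48514a8-95ec-4c2e-a874-63c332a7c8bc"]))
```

Omitting a key entirely, rather than sending an empty array, mirrors the statement that near and far are each optional.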

Note that locality is determined from the server UUID and the rack location of each server. Each server is automatically assigned a UUID; however, by default the rack metadata for each server is blank and must be populated by your administrators to gain the full benefit of locality hints.

Locality hints strict option

In addition to "near" and "far", you can specify "strict" as true or false. Setting strict to true causes the provision to fail if the "near" and "far" hints cannot be satisfied; setting it to false allows the provision to succeed even if they are not met.

"locality": {
  "near": "803e6c05-7c89-464f-b8f7-37aa99ee3ead",
  "far": ["53e93593-6c4b-4268-963f-32633f526548",
          "a48514a8-95ec-4c2e-a874-63c332a7c8bc",
          "1988fa44-b849-449f-abf0-ab0f198d862e"],
  "strict": false
}

The combined effect of "near", "far", and "strict" is implemented as follows:

- Strict = false
  - NEAR: use the same CN, else the same rack, else anywhere.
  - FAR: use a different rack, else a different CN, else the same CN.
- Strict = true
  - NEAR: use the same CN, else the same rack, else FAIL.
  - FAR: use a different rack, else a different CN, else FAIL.
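The fallback order above can be read as a ranked list of placement tiers, with the last-resort tier dropped when strict is true. The sketch below picks a server relative to the host of a single reference instance; the `Server` tuple and `pick_server` function are hypothetical, every server is assumed to have its rack populated, and Triton's real allocator weighs many more factors than this.

```python
from typing import Callable, List, NamedTuple, Optional


class Server(NamedTuple):
    uuid: str
    rack: str  # assumes rack metadata has been populated for every server


def pick_server(candidates: List[Server], ref_host: Server,
                mode: str, strict: bool) -> Optional[Server]:
    """Hypothetical: pick a candidate relative to the server hosting the
    reference instance. Returns None when no allowed tier is satisfied."""

    def same_cn(s: Server) -> bool:
        return s.uuid == ref_host.uuid

    def same_rack(s: Server) -> bool:
        return s.rack == ref_host.rack

    tiers: List[Callable[[Server], bool]]
    if mode == "near":
        # Same CN, else same rack, else anywhere.
        tiers = [same_cn, same_rack, lambda s: True]
    elif mode == "far":
        # Different rack, else different CN, else same CN.
        tiers = [lambda s: not same_rack(s), lambda s: not same_cn(s), same_cn]
    else:
        raise ValueError("mode must be 'near' or 'far'")

    if strict:
        tiers = tiers[:-1]  # strict removes the last-resort tier, so it can fail

    for accept in tiers:
        for server in candidates:
            if accept(server):
                return server  # first candidate in the best available tier
    return None


servers = [Server("cn1", "rack-a"), Server("cn2", "rack-a"), Server("cn3", "rack-b")]
host = servers[0]  # the reference instance lives on cn1
print(pick_server(servers, host, "far", strict=False))  # cn3: different rack
print(pick_server([host], host, "far", strict=True))    # None: only the same CN is left
```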

Rack and owner

Additionally, Triton attempts to provision a customer's new instances away from other instances the customer currently owns, and in different racks. This helps prevent multiple instances from being clustered on the same host, or on hosts in the same rack that may share a single point of failure. This is not a hard rule: if Triton cannot place the instance away from the customer's other instances and on a separate rack, the provision still goes through.
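One way to picture this soft preference is as a sort order rather than a filter: servers in racks, and on hosts, that hold fewer of the customer's instances come first, but no server is excluded. The sketch below is illustrative only; `rank_servers` and its inputs are hypothetical, weighing rack spread before host spread is an assumption, and this is not how Triton's designation is implemented.

```python
from collections import Counter
from typing import Dict, List


def rank_servers(servers: Dict[str, str], owner_hosts: List[str]) -> List[str]:
    """Hypothetical: order candidate servers so that racks and hosts holding
    fewer of the customer's instances come first.

    servers:     candidate server UUID -> rack name
    owner_hosts: server UUID hosting each of the customer's existing instances
    """
    per_server = Counter(owner_hosts)
    # Hosts outside the candidate set are ignored here because their rack is unknown.
    per_rack = Counter(servers[s] for s in owner_hosts if s in servers)
    # Soft rule: no server is removed; rack spread is weighed before host spread.
    return sorted(servers, key=lambda s: (per_rack[servers[s]], per_server[s]))


print(rank_servers({"cn1": "rack-a", "cn2": "rack-a", "cn3": "rack-b"}, ["cn1"]))
# ['cn3', 'cn2', 'cn1']
```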

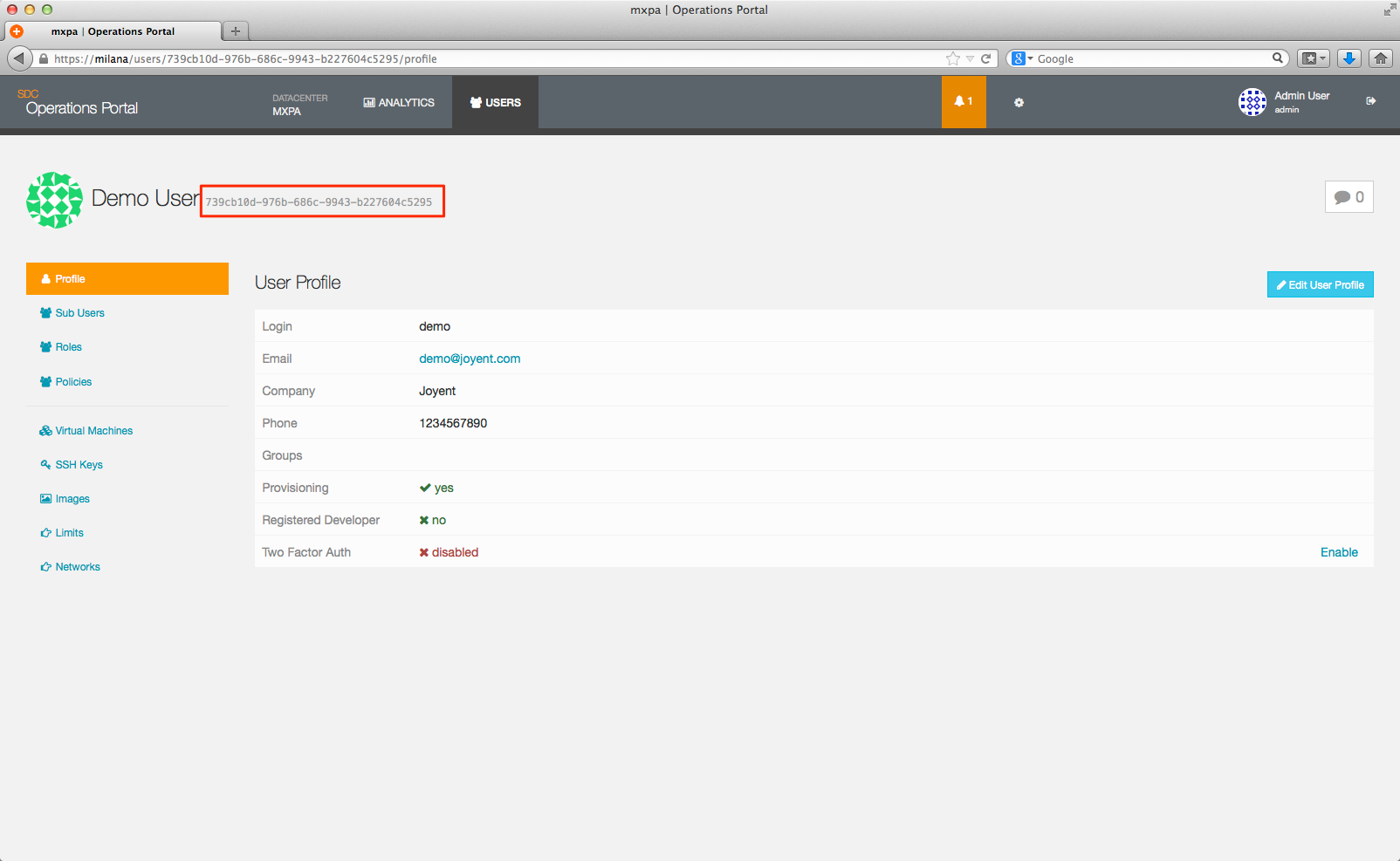

Customer UUID

The customer UUID is the value associated with the customer's account in UFDS.

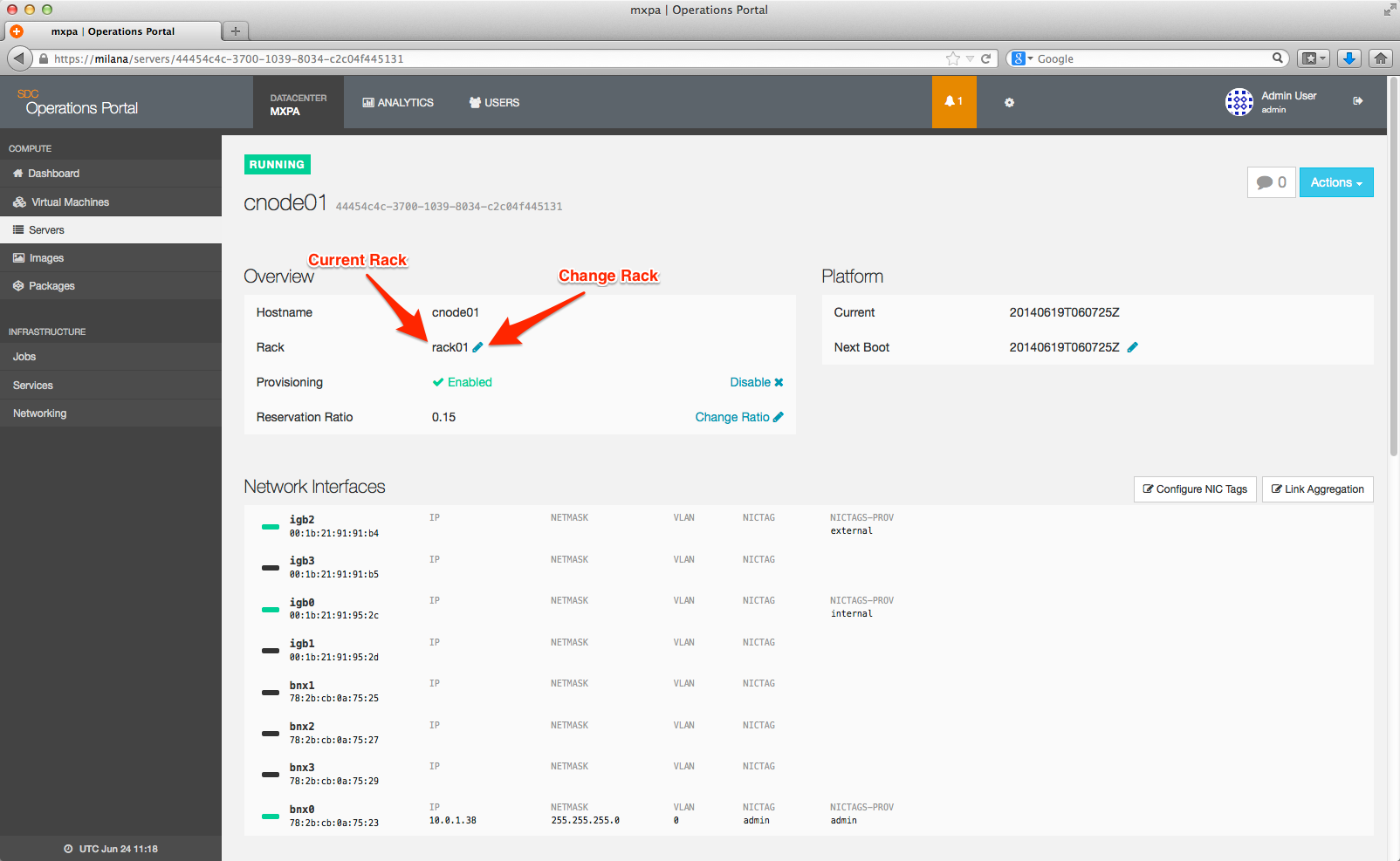

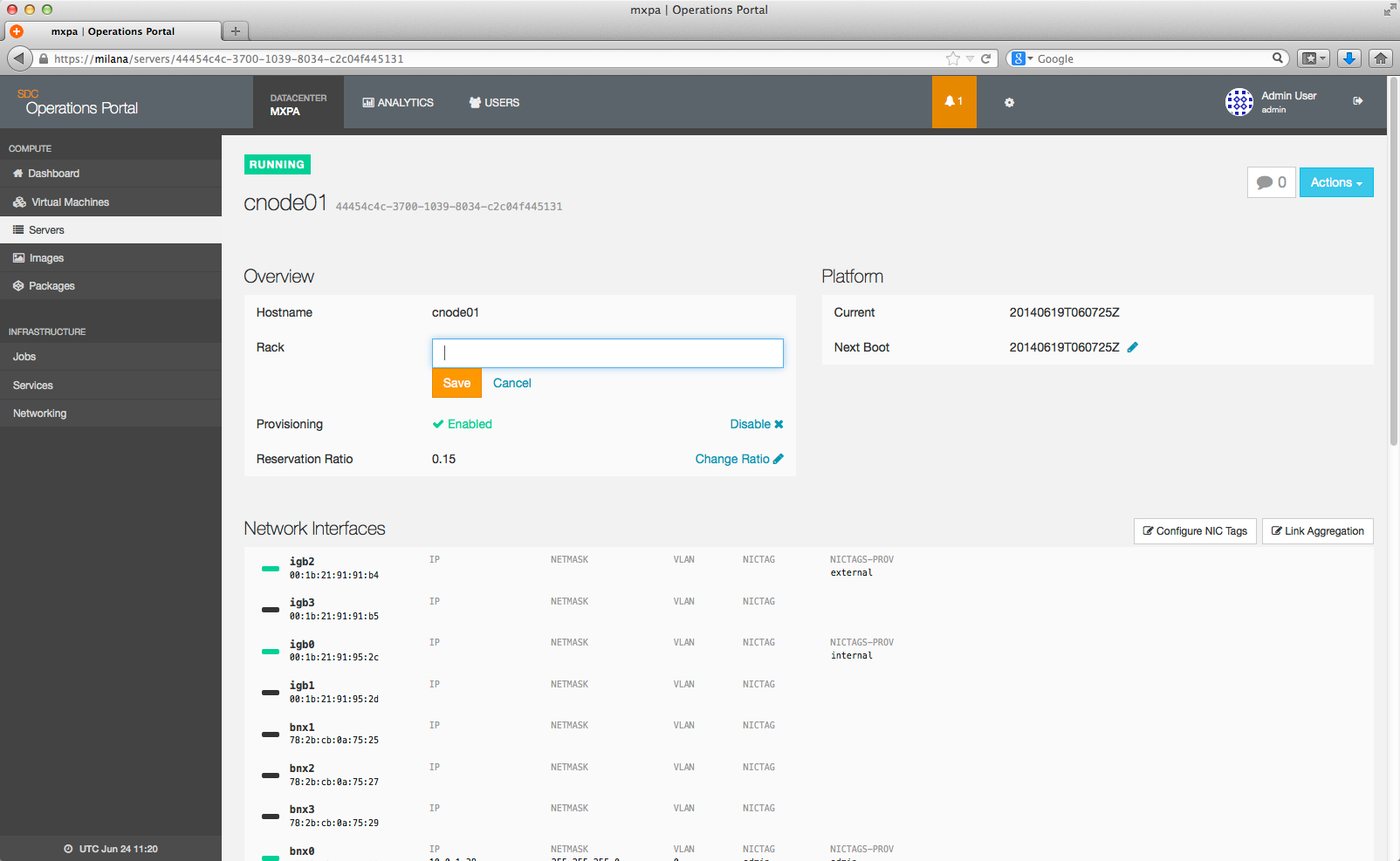

Rack ID

Rack data is provided by the operator through the Operations Portal.

Clicking the pencil to the right of the name assigned to the rack brings up an edit box that allows you to change the name of the rack.

In this way, you can add a new rack name or change an existing one.

Traits best practices / examples

As an example, the following traits are used in Triton DataCenter:

- (None): The absence of any trait means the CN is available for general provisioning.

- ssd: Designates a system for SSD (High IO) packages; the value is "true".

- inventory: Designates an empty system as "held inventory", that is, empty but available to supplement general provisioning by removal of the trait.

- rma: A system in need of hardware repair or refresh; the value holds a ticket identifier for the maintenance or repair required.

- maint: A system scheduled for maintenance in the future; the value is the date of the maintenance or reboot.

- internal: Designates a node set aside for some special internal use; the value is a short description of that use. Example: "internal": "NFS Server", or "internal": "PKGSRC Development".

- eol: The system is to be removed from service permanently, for example for lease returns or a hardware refresh. The value should be an array with values indicating the schedule and the ticket (if applicable).

Current "internal" values used are:

- "internal": "Manta Node"

- "internal": "Net Ops"

- "internal": "NFS Server"

- "internal": "PKGSRC Development"

EOL (end of life) trait example. This trait is used internally for two purposes. First, Triton will never find a package that matches eol, so no instances will be provisioned to this server. Second, operations staff can scan the traits through CNAPI to quickly identify EOL-eligible hardware and find the associated JIRA ticket describing the required decommissioning steps.

44454c4c-4c00-104e-8032-b7c04f315131 7LN21Q1 {
  "eol": [
    "DFS9",
    "SYSOPS-6549"
  ]
}
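A scan like the one described above can be sketched as a filter over server records. The sketch below assumes each record is a dictionary with `uuid`, `hostname`, and `traits` keys, similar to the server objects CNAPI returns; fetching the records from CNAPI is left out, `find_eol_servers` is a hypothetical name, and reading `7LN21Q1` as the hostname in the example above is also an assumption.

```python
from typing import Dict, Iterable, List, Tuple


def find_eol_servers(servers: Iterable[Dict]) -> List[Tuple[str, str, List[str]]]:
    """Hypothetical: list (uuid, hostname, eol values) for servers with an eol trait."""
    found = []
    for server in servers:
        traits = server.get("traits") or {}
        if "eol" not in traits:
            continue
        value = traits["eol"]
        # The convention on this page is an array, but tolerate a single value.
        values = value if isinstance(value, list) else [value]
        found.append((server["uuid"], server.get("hostname", ""), values))
    return found


servers = [
    # Values from the example above; "hostname" is an assumed field mapping.
    {"uuid": "44454c4c-4c00-104e-8032-b7c04f315131", "hostname": "7LN21Q1",
     "traits": {"eol": ["DFS9", "SYSOPS-6549"]}},
    # Hypothetical server with no traits, so it is skipped.
    {"uuid": "00000000-0000-0000-0000-000000000000", "hostname": "example",
     "traits": {}},
]
for server_uuid, hostname, values in find_eol_servers(servers):
    print(server_uuid, hostname, values)
```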